The new cloud offering should be 100% carbon neutral and will run on the Cray supercomputer, HPE said.

The new supercomputing cloud service GreenLake for Large Language Models will be available in late 2023 or early 2024 in the U.S., Hewlett Packard Enterprise announced at HPE Discover on Tuesday. GreenLake for LLMs will allow enterprises to train, tune and deploy large-scale artificial intelligence that is private to each individual business.

GreenLake for LLMs will be available to European customers following the U.S. release, with an anticipated release window in early 2024.

HPE partners with AI software startup Aleph Alpha

“AI is at an inflection point, and at HPE we are seeing demand from various customers beginning to leverage generative AI,” said Justin Hotard, executive vice president and general manager for HPC & AI Business Group and Hewlett Packard Labs, in a virtual presentation.

GreenLake for LLMs runs on an AI-native architecture spanning hundreds or thousands of CPUs or GPUs, depending on the workload. HPE said this flexibility within a single AI-native architecture makes the service more efficient than general-purpose cloud options that run multiple workloads in parallel. GreenLake for LLMs was created in partnership with Aleph Alpha, a German AI startup, which provided a pre-trained LLM called Luminous. Luminous works in English, French, German, Italian and Spanish and can use both text and images to make predictions.

The collaboration went both ways, with Aleph Alpha using HPE infrastructure to train Luminous in the first place.

“By using HPE’s supercomputers and AI software, we efficiently and quickly trained Luminous,” said Jonas Andrulis, founder and CEO of Aleph Alpha, in a press release. “We are proud to be a launch partner on HPE GreenLake for Large Language Models, and we look forward to expanding our collaboration with HPE to extend Luminous to the cloud and offer it as-a-service to our end customers to fuel new applications for business and research initiatives.”

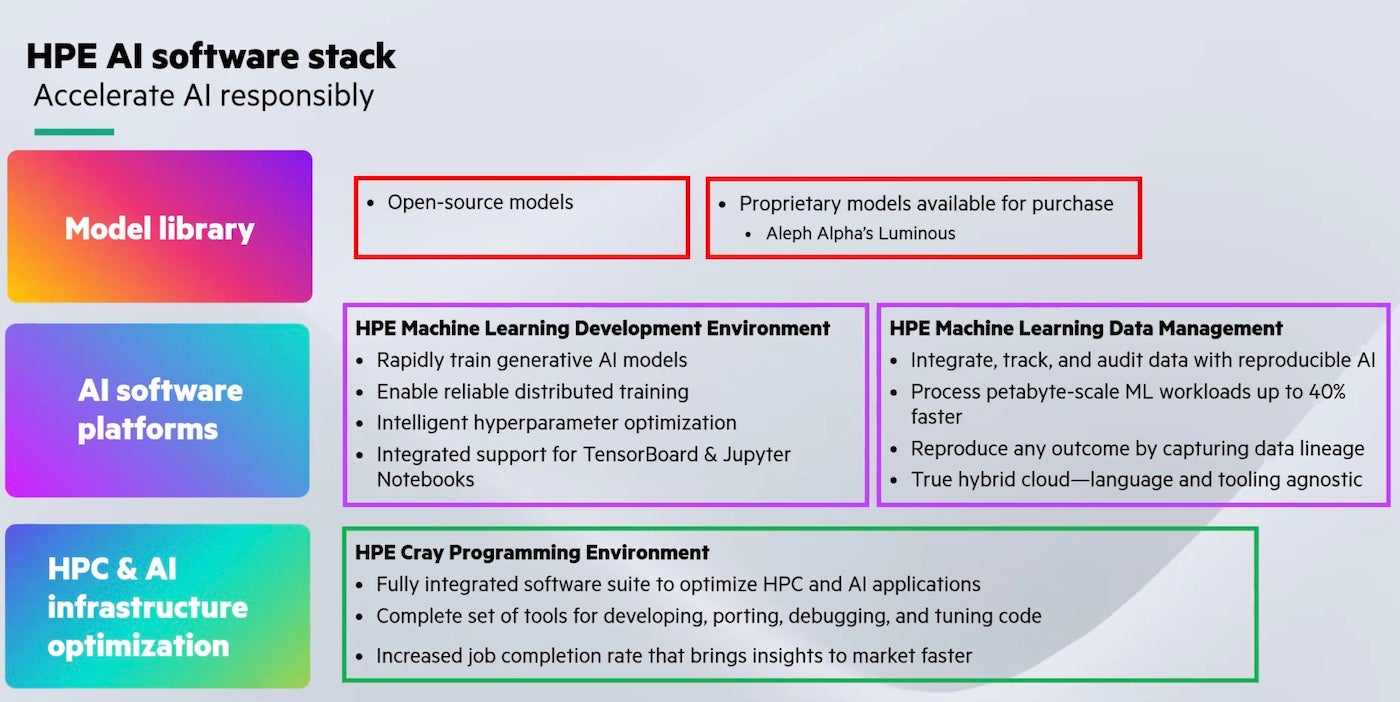

The initial launch will include a set of open-source and proprietary models for retraining or fine-tuning. In the future, HPE expects to provide AI specialized for tasks related to climate modeling, healthcare, finance, manufacturing and transportation.

For now, GreenLake for LLMs will be part of HPE’s overall AI software stack (Figure A), which includes the Luminous model, machine learning development, data management and development programs, and the Cray programming environment.

Figure A

HPE’s Cray XD supercomputers enable enterprise AI performance

GreenLake for LLMs runs on HPE’s Cray XD supercomputers and NVIDIA H100 GPUs. The supercomputer and HPE Cray Programming Environment allow developers to do data analytics, natural language tasks and other work on high-powered computing and AI applications without having to run their own hardware, which can be costly and require expertise specific to supercomputing.

Large-scale enterprise production for AI requires massive performance resources, skilled people, and security and trust, Hotard pointed out during the presentation.

Getting more power out of renewable energy

By using a colocation facility, HPE aims to power its supercomputing with 100% renewable energy. HPE is working with a computing center specialist, QScale, in North America on a design built specifically for this purpose.

“In all of our cloud deployments, the objective is to provide a 100% carbon-neutral offering to our customers,” said Hotard. “One of the benefits of liquid cooling is you can actually take the wastewater, the heated water, and reuse it. We have that in other supercomputer installations, and we’re leveraging that expertise in this cloud deployment as well.”

Alternatives to HPE GreenLake for LLMs

Other cloud-based services for running LLMs include NVIDIA’s NeMo (which is currently in early access), Amazon Bedrock, and Oracle Cloud Infrastructure.

Hotard noted in the presentation that GreenLake for LLMs will be a complement to, not a replacement for, large cloud services like AWS and Google Cloud Platform.

“We can and intend to integrate with the public cloud. We see this as a complementary offering; we don’t see this as a competitor,” he said.