Google’s latest annual environmental report reveals the true impact its recent forays into artificial intelligence have had on its greenhouse gas emissions.

The expansion of its data centres to support AI developments contributed to the company producing 14.3 million tonnes of carbon dioxide equivalents in 2023. This represents a 48% increase over the equivalent figure for 2019 and a 13% increase since 2022.

“This result was primarily due to increases in data center energy consumption and supply chain emissions,” the report’s authors wrote.

“As we further integrate AI into our products, reducing emissions may be challenging due to increasing energy demands from the greater intensity of AI compute, and the emissions associated with the expected increases in our technical infrastructure investment.”
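
The two percentage increases can be worked backwards to the earlier totals they imply. The sketch below is our own back-of-the-envelope arithmetic, not figures published in Google’s report. It assumes both percentages are measured against the reported 2023 total of 14.3 million tonnes, and because the report’s percentages are rounded, the results are approximate.

```python
# Back-calculate the earlier totals implied by Google's reported 2023 figure.
# Only the 2023 total and the two percentage increases come from the report;
# the baselines below are derived estimates, not published figures.

EMISSIONS_2023_MT = 14.3  # million tonnes CO2e, as reported for 2023


def implied_baseline(current: float, pct_increase: float) -> float:
    """Return the earlier value that, grown by pct_increase percent, gives current."""
    return current / (1 + pct_increase / 100)


baseline_2019 = implied_baseline(EMISSIONS_2023_MT, 48)  # 48% rise since 2019
baseline_2022 = implied_baseline(EMISSIONS_2023_MT, 13)  # 13% rise since 2022

print(f"Implied 2019 total: {baseline_2019:.1f} Mt CO2e")
print(f"Implied 2022 total: {baseline_2022:.1f} Mt CO2e")
```

On these assumptions, the figures point to roughly 9.7 million tonnes in 2019 and 12.7 million tonnes in 2022.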

Google claims it cannot isolate AI’s share of its data centre emissions

In 2021, Google pledged to reach net-zero emissions across all its operations and value chain by 2030. The report states this goal is now deemed “extremely ambitious” and “will require [Google] to navigate significant uncertainty.”

The report goes on to say the environmental impact of AI is “complex and difficult to predict,” so the company publishes only data centre-wide metrics, which lump in cloud storage and other operations. This means the environmental damage caused specifically by AI training and use in 2023 is being kept under wraps for now.

That being said, in 2022, David Patterson, a Google engineer, wrote in a blog post, “Our data shows that ML training and inference are only 10%–15% of Google’s total energy use for each of the last three years.” However, this proportion is likely to have increased since then.

Why AI is responsible for tech companies’ increased emissions

Like most of its competitors, Google has introduced a number of AI projects and features over the last year, including Gemini, Gemma, AI Overviews and image generation in Search, and AI security tools.

AI systems, particularly those involved in training large language models, demand substantial computational power. This translates into higher electricity usage and, consequently, more carbon emissions than normal online activity.

According to a study by Google and UC Berkeley, training OpenAI’s GPT-3 generated 552 metric tonnes of carbon dioxide equivalent, the same as driving 112 petrol cars for a year. Furthermore, studies estimate that a generative AI system uses around 33 times more energy than machines running task-specific software.
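
Dividing the study’s training figure by its car comparison shows the per-vehicle emissions that comparison implies. This is simple illustrative arithmetic on the two numbers quoted above, not a figure published in the study itself.

```python
# Illustrative arithmetic on the two figures quoted from the study.
TRAINING_EMISSIONS_T = 552  # tonnes CO2e attributed to training GPT-3
CARS_EQUIVALENT = 112       # petrol cars driven for one year

# Annual emissions per car implied by the comparison.
per_car = TRAINING_EMISSIONS_T / CARS_EQUIVALENT
print(f"Implied annual emissions per car: {per_car:.2f} t CO2e")
```

The result, just under 5 tonnes per car per year, is the average annual footprint the comparison implicitly assigns to a petrol car.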

Last year, Google’s total data centre electricity consumption grew by 17%, and while we don’t know what proportion of this was due to AI-related activities, the company did admit it “expect(s) this trend to continue in the future.”

Google is not the first of the big tech organisations to reveal that AI developments are taking a toll on its emissions and that they are proving difficult to manage. In May, Microsoft announced that its emissions were up 29% from 2020, primarily as a result of building new data centres. “Our challenges are in part unique to our position as a leading cloud supplier that is expanding its data centers,” Microsoft’s environmental sustainability report said.

Leaked documents viewed by Business Insider in April reportedly show Microsoft has obtained more than 500MW of additional data centre space since July 2023 and that its GPU footprint now supports live “AI clusters” in 98 locations globally.

Four years ago, Microsoft President Brad Smith referred to the company’s pledge to become carbon negative by 2030 as a “moonshot.” However, speaking on Bloomberg’s Zero podcast in May, he admitted that “the moon has moved” since then and is now “more than five times as far away.”

Alex de Vries, the founder of digital trend analysis platform Digiconomist, which tracks AI sustainability, thinks that Google’s and Microsoft’s environmental reports prove that tech bosses are not taking sustainability as seriously as AI development. “On paper they might say so, but the reality is that they are currently clearly prioritising growth over meeting those climate targets,” he told TechRepublic in an email.

“Google is already struggling to fuel its growing energy demand from renewable energy sources. The carbon intensity of every MWh consumed by Google is rising rapidly. Globally we only have a limited supply of renewable energy sources available and the current trajectory of AI-related electricity demand is already too much. Something will have to change drastically to make those climate targets achievable.”

Google’s skyrocketing emissions could also have a trickle-down impact on the businesses using its AI products, which have their own environmental goals and regulations to comply with. “If Google is part of your value chain, Google’s emissions going up also means your Scope 3 emissions are going up,” de Vries told TechRepublic.
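
To see why a supplier’s rising emissions flow into a customer’s Scope 3 figures, consider one simple way of allocating them: in proportion to the customer’s share of the supplier’s revenue. The sketch below assumes that revenue-share method and uses entirely hypothetical numbers and a made-up supplier name (`ExampleCloud`). Real Scope 3 accounting can use other methods and more detailed, supplier-specific data.

```python
from dataclasses import dataclass


@dataclass
class Supplier:
    name: str                 # hypothetical supplier name
    total_emissions_t: float  # supplier's total emissions, tonnes CO2e
    total_revenue: float      # supplier's total revenue, same currency as spend


def allocated_scope3(supplier: Supplier, customer_spend: float) -> float:
    """Allocate supplier emissions to a customer by its share of supplier revenue.

    This revenue-share approach is one simple allocation method, assumed here
    for illustration only.
    """
    share = customer_spend / supplier.total_revenue
    return supplier.total_emissions_t * share


# Hypothetical figures: the supplier's emissions rise 13% while its revenue
# and the customer's spend stay the same.
before = Supplier("ExampleCloud", total_emissions_t=1_000_000, total_revenue=10_000_000_000)
after = Supplier("ExampleCloud", total_emissions_t=1_130_000, total_revenue=10_000_000_000)
spend = 5_000_000

print(f"Allocated before: {allocated_scope3(before, spend):.1f} t CO2e")
print(f"Allocated after:  {allocated_scope3(after, spend):.1f} t CO2e")
```

With spend and revenue held fixed, a 13% rise in the supplier’s emissions becomes a 13% rise in the emissions allocated to the customer. If the supplier’s revenue grew as well, the allocated share would rise by less.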

How Google is managing its AI emissions

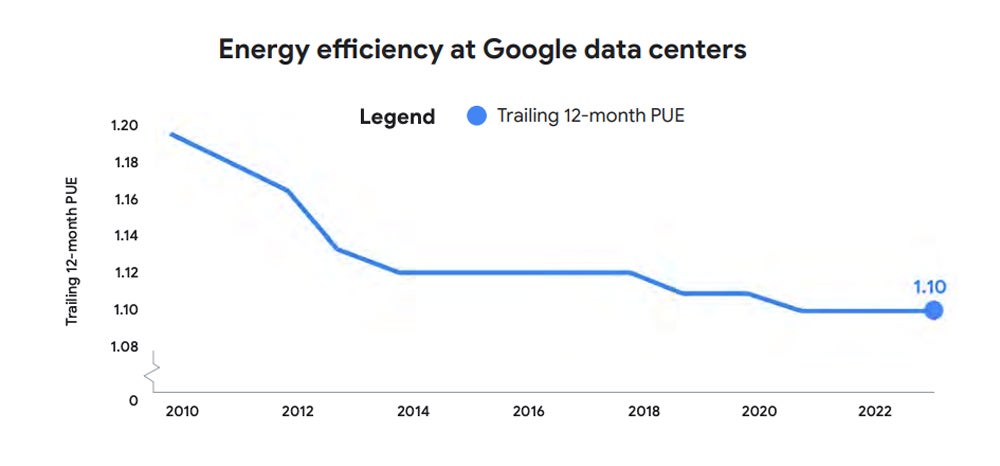

Google’s environmental report highlights a number of ways the company is managing the energy demands of its AI developments. Its latest Tensor Processing Unit, Trillium, is over 67% more energy efficient than the fifth generation, while its data centres are over 1.8 times more energy-efficient than typical enterprise data centres.

Google’s data centres also now deliver approximately four times as much computing power with the same amount of electrical power compared to five years ago.

In March 2024 at NVIDIA GTC, TechRepublic spoke with Mark Lohmeyer, vice president and general manager of compute and AI/ML Infrastructure at Google Cloud, about how its TPUs are getting more efficient.

He said, “If you think about running a highly efficient form of accelerated compute with our own in-house TPUs, we leverage liquid cooling for those TPUs that allows them to run faster, but also in a much more energy efficient and as a result a more cost effective way.”

Google Cloud also uses software to manage uptime sustainably by keeping its hardware busy rather than idle. “What you don’t want to have is a bunch of GPUs or any type of compute deployed using power but not actively producing, you know, the outcomes that we’re looking for,” Lohmeyer told TechRepublic. “And so driving high levels of utilisation of the infrastructure is also key to sustainability and energy efficiency.”
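
Lohmeyer’s point about idle hardware can be illustrated with a simple power model. The sketch below assumes, hypothetically, that an accelerator always draws a fixed idle power plus a share of its extra peak power in proportion to utilisation, and that its useful output scales linearly with utilisation. The wattages and job rates are invented for illustration and are not Google figures.

```python
def energy_per_job(idle_w: float, peak_w: float, utilisation: float,
                   jobs_per_hour_at_full: float) -> float:
    """Watt-hours per completed job under a hypothetical linear power model."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    # Idle draw is paid regardless; extra draw scales with utilisation.
    power_w = idle_w + (peak_w - idle_w) * utilisation
    # Useful output is assumed to scale linearly with utilisation.
    jobs_per_hour = jobs_per_hour_at_full * utilisation
    # Power sustained for one hour gives watt-hours; divide by jobs completed.
    return power_w / jobs_per_hour


# Hypothetical accelerator: 100 W idle, 400 W peak, 10 jobs per hour when fully busy.
for u in (1.0, 0.5, 0.25):
    print(f"utilisation {u:.0%}: {energy_per_job(100, 400, u, 10):.1f} Wh per job")
```

Under these assumptions, running at 25% utilisation costs 70 Wh per job against 40 Wh at full utilisation, because the idle draw is spread over fewer completed jobs.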

Google’s 2024 environmental report says the company is managing the environmental impact of AI in three ways:

- Model optimisation: For example, it boosted the training efficiency of its fifth-generation TPU by 39% with techniques that accelerate training, like quantisation, where the precision of numbers used to represent the model’s parameters is reduced to decrease the computational load.

- Efficient infrastructure: Its fourth-generation TPU was 2.7 times more energy-efficient than the third generation. In 2023, Google’s water stewardship programme offset 18% of its water usage, much of which goes into cooling data centres.

- Emissions reduction: Last year, 64% of the energy consumed by Google’s data centres came from carbon-free sources, which include renewable sources and carbon capture schemes. It also deployed carbon-intelligent computing platforms and demand response capabilities at its data centres.
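
Quantisation, mentioned in the model optimisation point above, can be illustrated with a generic example: mapping floating-point weights onto 8-bit integers using a single scale factor. This is a minimal sketch of symmetric per-tensor int8 quantisation with made-up weight values; it is not the specific technique or tooling Google used.

```python
def quantise_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantisation of floats to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    # One scale factor maps the largest magnitude onto 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q: list[int], scale: float) -> list[float]:
    """Map integers back to approximate floating-point values."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.05, 0.33, -0.6]  # hypothetical model parameters
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max error={max_error:.6f}")
```

Stored as int8 rather than 32-bit floats, each weight would take one byte instead of four, and the round-trip error is bounded by half the scale factor. That loss of precision is the trade-off that makes the arithmetic cheaper.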

In addition, Google’s AI products are being designed to address climate change in general, like fuel-efficient routing in Google Maps, flood prediction models, and the Green Light tool that helps engineers optimise the timing of traffic lights to reduce stop-and-go traffic and fuel consumption.

AI demand could overwhelm emissions goals

Google states the electricity consumption of its data centres, which power its AI activities amongst other things, makes up only about 0.1% of global electricity demand. More broadly, according to the International Energy Agency, data centres and data transmission networks are responsible for 1% of energy-related emissions.

However, this is expected to rise significantly in the next few years, with data centre electricity consumption projected to double between 2022 and 2026. According to SemiAnalysis, data centres will consume about 4.5% of global energy demand by 2030.

Considerable amounts of energy are required for the training and operation of AI models in data centres, but the manufacturing and transportation of the chips and other hardware also contribute. The IEA has estimated that, driven by rising demand, AI specifically will use 10 times as much electricity in 2026 as it did in 2023.
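
The data centre and AI projections in the two paragraphs above can be converted into implied annual growth rates. The sketch below is illustrative compound-growth arithmetic on the quoted multiples and years. It assumes steady growth each year, which the projections themselves do not necessarily assume.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold over `years`."""
    return multiple ** (1 / years) - 1


# Data centre electricity consumption doubling between 2022 and 2026.
dc_growth = implied_annual_growth(2, 2026 - 2022)
# AI electricity use rising tenfold between 2023 and 2026.
ai_growth = implied_annual_growth(10, 2026 - 2023)

print(f"Data centres: ~{dc_growth:.1%} per year")
print(f"AI: ~{ai_growth:.1%} per year")
```

A doubling over four years works out to roughly 19% a year, while a tenfold rise over three years implies AI’s electricity use more than doubling every year.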

Data centres also need huge amounts of water for cooling, and even more when running energy-intensive AI computations. One study from UC Riverside estimated that the amount of water withdrawn for AI activities could reach the equivalent of half of the U.K.’s annual consumption by 2027.

Increased demand for electricity could push tech companies back to non-renewable energy

Tech companies have long been large investors in renewable energy, with Google’s latest environmental report stating it purchased more than 25 TWh of it in 2023 alone. However, there are concerns that the skyrocketing demand for energy from their AI pursuits will keep coal- and oil-powered plants in business that would otherwise have been decommissioned.

For example, in December, county supervisors in northern Virginia approved plans for up to 37 data centres across just 2,000 acres, leading to proposals to expand coal power usage.